Why do small language models underperform? Studying Language Model Saturation via the Softmax Bottleneck

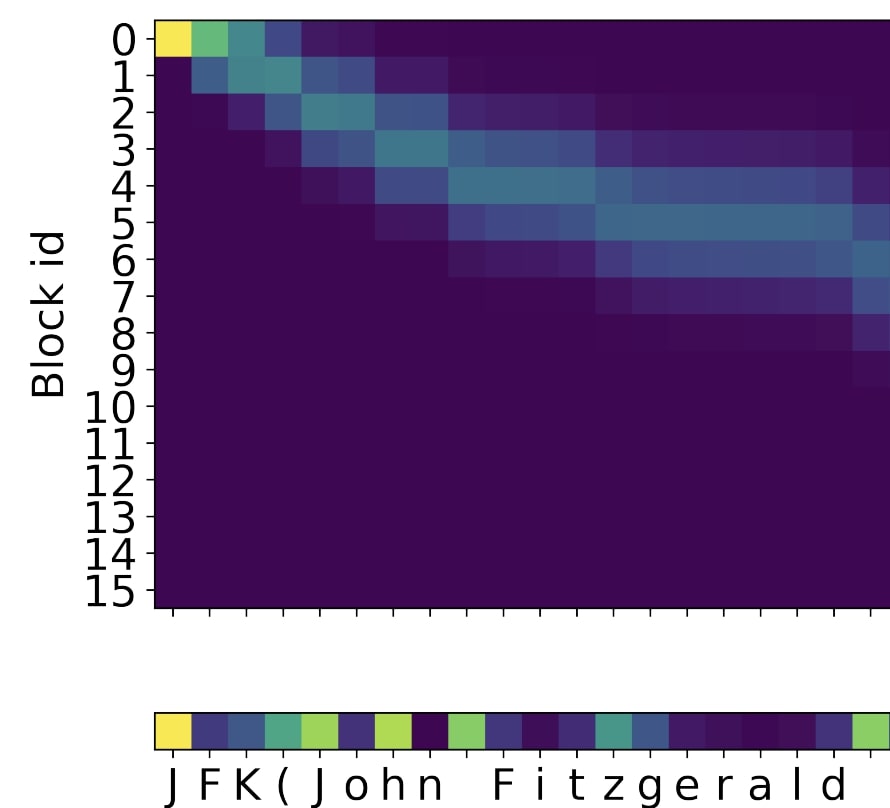

Small LM can saturate in performance during training because of the softmax bottleneck.

I am a postdoc in Yoav Artzi's lab at Cornell University. Before that, I obtained my PhD in the ALMAnaCH lab at Inria Paris, advised by Benoît Sagot and Éric de la Clergerie.

My research interests lie within the intersection of natural language processing and representation learning. I am specifically interested in understanding the representations of current language models, and studying how learning better representations can lead to better language models.

Small LM can saturate in performance during training because of the softmax bottleneck.

A simple token-level contrastive loss can replace cross-entropy and improve data and compute-efficiency for Masked and Causal LM training, especially when token vocabularies are larger.

We train a gradient-based neural tokenizer which learns segmentation softly, and improves robustness to misspellings and specific domains with atypical vocabularies.