MANTa: Efficient Gradient-Based Tokenization for End-to-End Robust Language Modeling

Last year, I got my first paper published in the Findings of EMNLP 2022! It was a joint effort with Roman Castagné, co-authored with my PhD supervisors Eric Villemonte de la Clergerie and Benoît Sagot from Inria’s ALMAnaCH team.

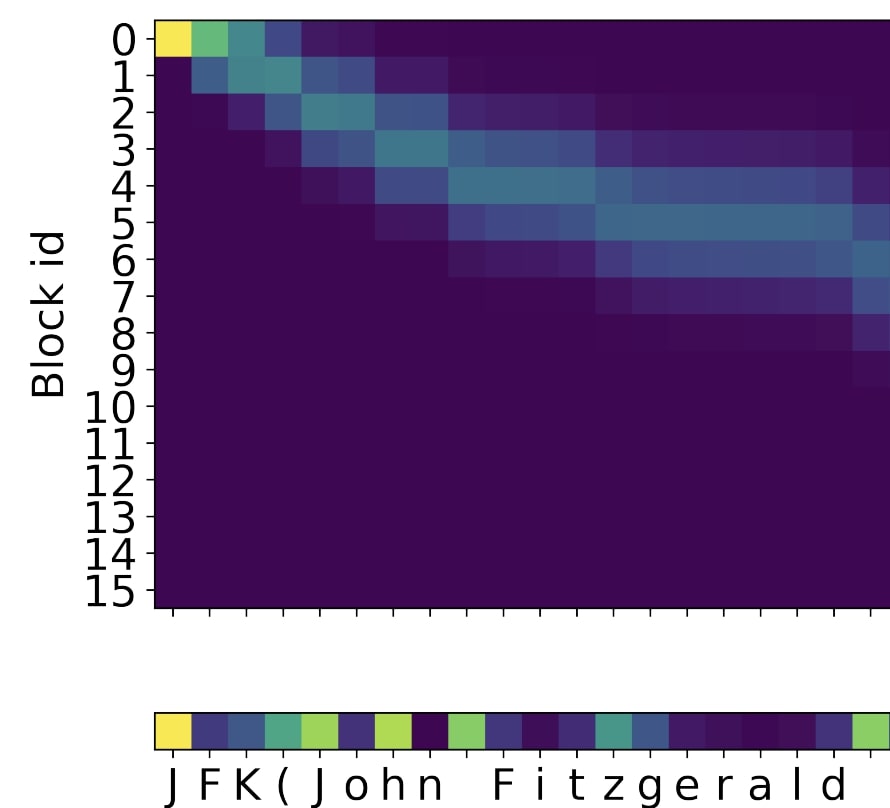

The paper introduces MANTa, a differentiable tokenizer that can be plugged into many language models to enable end-to-end neural language modeling.

Here is the PDF version of the paper, which you can also find here:

We also released our models’ weights and implementation on HuggingFace 🤗

Please cite as:

```bibtex
@inproceedings{godey-etal-2022-manta,
    title = "{MANT}a: Efficient Gradient-Based Tokenization for End-to-End Robust Language Modeling",
    author = "Godey, Nathan and
      Castagn{\'e}, Roman and
      de la Clergerie, {\'E}ric and
      Sagot, Beno{\^\i}t",
    booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2022",
    month = dec,
    year = "2022",
    address = "Abu Dhabi, United Arab Emirates",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2022.findings-emnlp.207",
    pages = "2859--2870",
}
```

This work was funded by the PRAIRIE institute as part of a PhD contract at Inria Paris and Sorbonne Université.

This post is licensed under CC BY 4.0 by the author.